pgpool-II 3.5 features

Introducing pgpool-II 3.5 new features

Like previous major releases, pgpool-II 3.5 is enhanced in many areas. This time, however, it also improves performance in some use cases, and this kind of change is pretty rare in pgpool-II's history. Let's discuss the performance of the extended query protocol first.

Improved performance in extended query protocol

The extended query protocol is used by many applications written in various languages and APIs, including Java and JDBC. One benefit is that it allows query plans to be reused, which saves planning time. The other is that it can make queries more secure: the protocol allows queries to take parameters. Without this, applications have to build query strings themselves, which can open the door to the well-known SQL injection attack.

Unfortunately, pgpool-II up to and including 3.4 is not good at handling the extended query protocol: queries executed through pgpool-II with the extended query protocol are very slow.

Why is the extended protocol slow anyway?

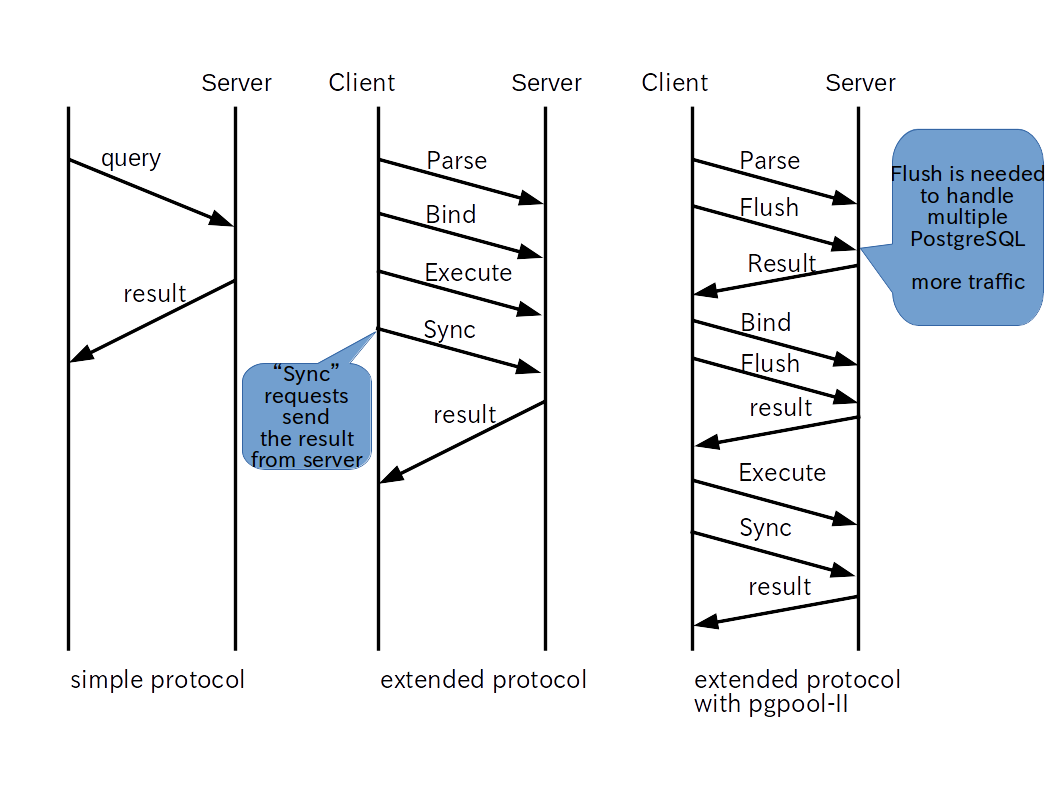

In the extended query protocol, a query is divided into several phases: Parse, Bind, Execute and Sync (there are more message types in the protocol; see the PostgreSQL docs for details). A Parse message requests creation of a query plan. A Bind message requests that parameters be attached to the plan. An Execute message requests execution of the query. Note that PostgreSQL does not send back the result of each phase as that phase completes. Rather, all of the responses are sent back to the client (which may be pgpool-II) at once.

However, pgpool-II needs to synchronize backend responses for its internal processing. It does this by issuing an additional Flush message in each protocol phase, which requests a response from the backend. The problem is that this generates a significant amount of network traffic and slows down query execution through pgpool-II.
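The cost of the extra Flush messages can be made concrete with a minimal sketch that frames the frontend messages by hand. The message layouts follow the PostgreSQL frontend/backend protocol. The helper names (`message`, `pipeline`, `wait_points`) are made up for this illustration, and the "Flush after every phase" sequence is only a reading of the description above, not a trace captured from pgpool-II.

```python
import struct

def message(type_byte, payload=b""):
    """Frame one frontend message: type byte, int32 length (counts itself), payload."""
    return type_byte + struct.pack("!I", len(payload) + 4) + payload

def cstr(text):
    """Encode a null-terminated protocol string."""
    return text.encode("utf-8") + b"\x00"

def parse(statement, query):
    # Zero parameter type OIDs: the server infers the types.
    return message(b"P", cstr(statement) + cstr(query) + struct.pack("!H", 0))

def bind(portal, statement, params):
    body = cstr(portal) + cstr(statement)
    body += struct.pack("!H", 0)              # no format codes: all parameters are text
    body += struct.pack("!H", len(params))
    for value in params:
        raw = value.encode("utf-8")
        body += struct.pack("!i", len(raw)) + raw
    body += struct.pack("!H", 0)              # no result format codes: all results are text
    return message(b"B", body)

def execute(portal, max_rows=0):
    return message(b"E", cstr(portal) + struct.pack("!i", max_rows))

FLUSH = message(b"H")
SYNC = message(b"S")

def pipeline(query, params, flush_each_phase):
    """Build the message sequence for one query (assumed ordering, see lead-in)."""
    out = []
    for msg in (parse("", query), bind("", "", params), execute("")):
        out.append(msg)
        if flush_each_phase:
            out.append(FLUSH)
    out.append(SYNC)
    return out

def wait_points(messages):
    """Count the messages after which the sender stops to read backend replies."""
    return sum(1 for msg in messages if msg[:1] in (b"H", b"S"))

with_flush = pipeline("SELECT $1::int", ["42"], flush_each_phase=True)
without_flush = pipeline("SELECT $1::int", ["42"], flush_each_phase=False)
print(wait_points(with_flush), wait_points(without_flush))
```

Under these assumptions, the flushed sequence waits on the backend four times per query, while the plain Parse/Bind/Execute/Sync sequence waits once.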

Overcoming the slowness

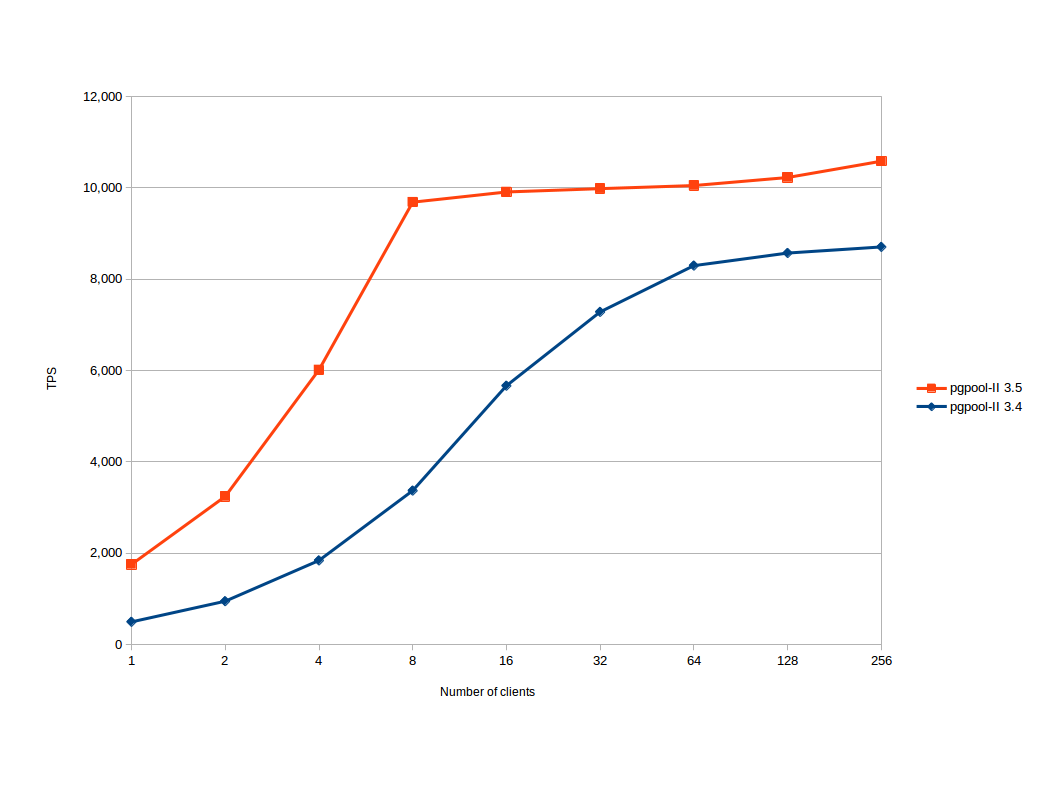

Pgpool-II 3.5 overcomes this weakness in some cases. In streaming replication mode, pgpool-II does not need to synchronize the backends, so pgpool-II 3.5 does not issue Flush messages. This was not an easy project, because pgpool-II's architecture presupposed that the backend responds each time an extended protocol message is sent. The hard work is rewarded by a significant performance improvement: benchmarking shows that pgpool-II 3.5 is 20% to 250% faster than 3.4.

The performance of the other modes (native replication mode and raw mode) remains the same.

Overcoming the thundering herd problem

Pgpool-II has a problem called the thundering herd problem.

What is the thundering herd problem anyway?

Wikipedia says:

The thundering herd problem occurs when a large number of processes waiting for an event are awoken when that event occurs, but only one process is able to proceed at a time. After the processes wake up, they all demand the resource and a decision must be made as to which process can continue. After the decision is made, the remaining processes are put back to sleep, only to all wake up again to request access to the resource.

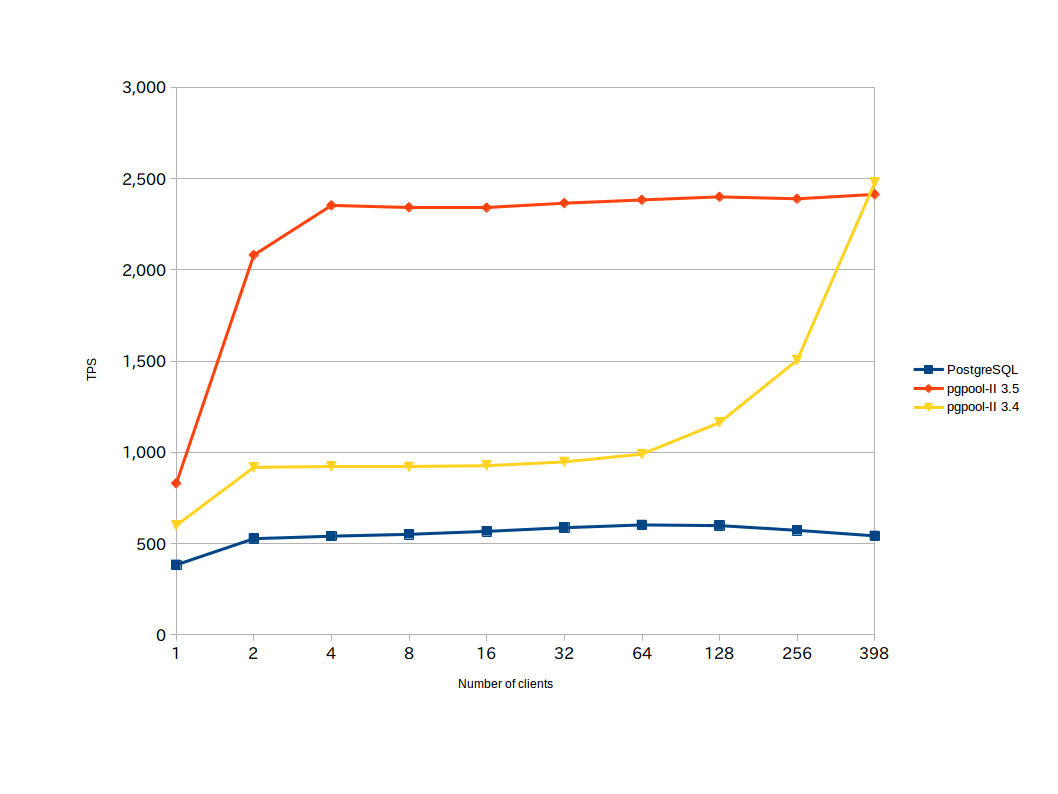

Pgpool-II forks off child processes, which wait for connection requests from clients. When a request arrives, only one of them can accept it; the other processes are awoken only to go back to sleep. Pgpool-II 3.5 uses a semaphore to allow only one process to accept the request, and never wakes any process other than the one that has been waiting for the semaphore. This eliminates the thundering herd problem. To enable this functionality, you must turn on the new parameter serialize_accept in pgpool.conf. Also, child_life_time must be disabled (set to 0) because of a limitation of the current implementation. Note that the method is most effective when num_init_children is large and the number of concurrent clients is relatively small. In our test case, pgpool-II 3.5 is 40% to 150% faster than 3.4.
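The idea of serializing accept with a semaphore can be sketched in a few lines. This is only an illustration of the technique, not pgpool-II's implementation: it uses threads where pgpool-II uses forked child processes, and all names (`worker`, `run_demo`, `accept_lock`) are hypothetical. Only the worker holding the semaphore blocks in `accept()`; the others sleep on the semaphore and are not woken by incoming connections.

```python
import socket
import threading

def worker(listener, accept_lock, stop, served, worker_id):
    """Serve connections; only the semaphore holder ever blocks in accept()."""
    while not stop.is_set():
        with accept_lock:                 # stand-in for the accept semaphore
            if stop.is_set():
                return
            try:
                conn, _ = listener.accept()
            except socket.timeout:        # no client yet: release and retry
                continue
        with conn:                        # handle the client outside the lock
            conn.sendall(b"ok")
        served.append(worker_id)

def run_demo(num_workers=4, num_clients=8):
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))       # let the OS pick a free port
    listener.listen(num_clients)
    listener.settimeout(0.2)              # lets workers notice the stop flag
    port = listener.getsockname()[1]

    accept_lock = threading.Semaphore(1)
    stop = threading.Event()
    served = []
    workers = [
        threading.Thread(target=worker,
                         args=(listener, accept_lock, stop, served, i))
        for i in range(num_workers)
    ]
    for thread in workers:
        thread.start()

    replies = []
    for _ in range(num_clients):
        with socket.create_connection(("127.0.0.1", port)) as client:
            replies.append(client.makefile("rb").read())   # read until EOF

    stop.set()
    for thread in workers:
        thread.join()
    listener.close()
    return replies, served

if __name__ == "__main__":
    replies, served = run_demo()
    print(len(replies), "clients served by workers", sorted(set(served)))
```

Client handling happens after the semaphore is released, so the next worker can enter `accept()` while the previous connection is still being served.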

The graph shows how pgpool-II 3.5 outperforms 3.4. We ran everything (pgpool-II, PostgreSQL and pgbench) on a laptop with 16 GB of memory and a 512 GB SSD, running Ubuntu 14.04. We started pgbench with the -C option, which repeatedly connects to and disconnects from pgpool-II, to simulate the thundering herd problem. Note that the blue line shows raw PostgreSQL TPS for comparison. There, pgbench repeatedly connects to and disconnects from PostgreSQL, which results in poor performance. This shows why we need connection pooling.

Importing PostgreSQL 9.5 parser

Pgpool-II has a built-in SQL parser, which is used mainly for query dispatching and load balancing. The parser is imported from PostgreSQL. Pgpool-II 3.4's SQL parser was imported from PostgreSQL 9.4 and does not understand the new syntax introduced in PostgreSQL 9.5. Queries that the parser does not understand are sent to the primary node and are never load balanced, which makes pgpool-II less effective when it has to handle such queries.

Pgpool-II 3.5 imports the SQL parser from PostgreSQL 9.5, so it understands the new syntax introduced in PostgreSQL 9.5. In particular, queries using GROUPING SETS, CUBE, ROLLUP and TABLESAMPLE can now be load balanced and used in the query cache. Of course, pgpool-II 3.5 understands the syntax used in PostgreSQL 9.4 as well.

Also, INSERT...ON CONFLICT and UPDATE tab SET (col1, col2, ...) = (SELECT ...) ... can now be handled properly by query rewriting in native replication mode.

Other new features

Some PaaS systems, including Heroku, do not allow the use of the system databases, i.e. postgres and template1. This prevented pgpool-II from being used on such PaaS systems. The new parameters health_check_database and sr_check_database specify the database used for health checking and the database used for checking streaming replication delay, respectively.
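As an illustration, a pgpool.conf fragment for such an environment might look like the following. The database name `appdb` is a placeholder for whatever database the provider lets you connect to; it is an assumption for this example, not a default.

```
# Use an accessible database instead of postgres/template1
# ('appdb' is a hypothetical name)
health_check_database = 'appdb'
sr_check_database = 'appdb'
```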